The API or an operator are the options if you want to let the external party trigger the DAG indirectly themselves. If triggering is truly going to be 100% manual, you'll probably go with the UI or the CLI. Operator: use the TriggerDagRunOperator; it is listed in the Airflow documentation, which also includes an example.

#TRIGGER AIRFLOW DAG VIA API UPDATE#

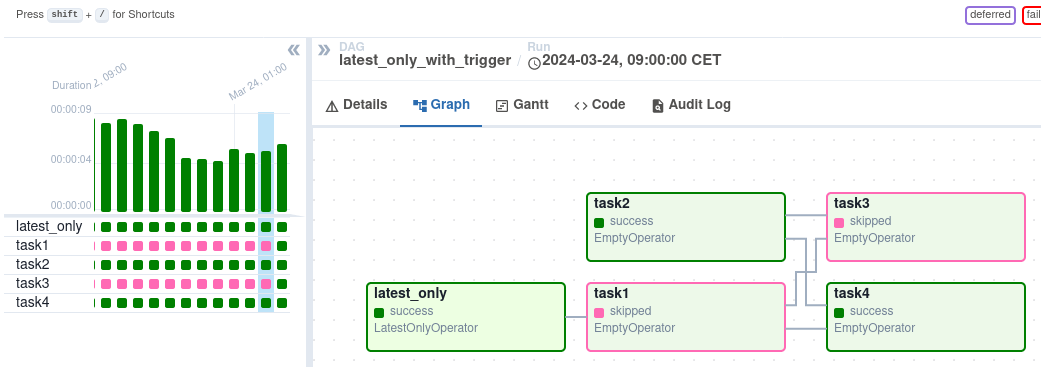

You will need to use several endpoints for that: List DAGs, Trigger a new DAG run, and Update a DAG. In Airflow < 2.0 you can do some of that using the experimental API. In Airflow 2.0 and later you can do it with the REST API.

API: call POST /api/experimental/dags/<DAG_ID>/dag_runs (experimental API).

CLI: run airflow trigger_dag. Note that later versions of Airflow use the syntax airflow dags trigger. One task wrapper for triggering an Airflow DAG run also notes that successful execution of the task requires a separate conda environment in which Airflow is installed.

UI: click the "Trigger DAG" button, either on the main DAGs view or on a specific DAG.

To invoke an AWS Lambda function from a DAG, the fragment in the question assigns invoke_lambda_function = AwsLambdaInvokeFunctionOperator( with task_id='run_process_with_external_files'. You can then call the connection by matching the _CONN_ID.

The DAGs that are un-paused can be found in the Active tab.
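The REST API calls mentioned above can be sketched with the standard library alone. This is a minimal sketch, assuming an Airflow 2.x webserver with the basic-auth API backend enabled; the base URL, DAG id, and credentials are placeholders, and the helper names `build_trigger_request` and `build_pause_request` are hypothetical. The functions only build the requests; nothing is sent until you pass one to `urllib.request.urlopen`.

```python
import base64
import json
import urllib.request


def _basic_auth_header(username: str, password: str) -> str:
    """Encode credentials for HTTP basic auth (assumes the basic-auth backend)."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8"))
    return "Basic " + token.decode("ascii")


def _json_request(url: str, method: str, payload: dict, username: str, password: str):
    """Build a JSON request object without sending it."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method=method,
        headers={
            "Content-Type": "application/json",
            "Authorization": _basic_auth_header(username, password),
        },
    )


def build_trigger_request(base_url, dag_id, conf, username, password):
    """'Trigger a new DAG run': POST /api/v1/dags/{dag_id}/dagRuns."""
    url = f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns"
    return _json_request(url, "POST", {"conf": conf}, username, password)


def build_pause_request(base_url, dag_id, is_paused, username, password):
    """'Update a DAG': PATCH /api/v1/dags/{dag_id} to pause or unpause it."""
    url = f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}?update_mask=is_paused"
    return _json_request(url, "PATCH", {"is_paused": is_paused}, username, password)


if __name__ == "__main__":
    # Placeholder values; replace with your own deployment's settings.
    req = build_trigger_request(
        "http://localhost:8080", "example_dag", {"source": "manual"}, "admin", "admin"
    )
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes it easy to inspect the URL and body before pointing the code at a real webserver.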

In the UI, you can see paused DAGs in the Paused tab.

I want to trigger an AWS Lambda function from Airflow, and I have found this code: import boto3, json, typing, followed by def invokeLambdaFunction(*, functionName: str = None, payload: typing.Mapping[str, str] = None), which starts with if functionName == None: raise Exception('ERROR: functionName parameter cannot be (the rest of the snippet is cut off in the source).
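The snippet quoted above is truncated, so the following is only a sketch of what such a helper might look like, not the original author's code. It assumes boto3's Lambda client and its `invoke` call; the snake_case names, the `client` parameter (added here so a stub can be injected), and the error message are all assumptions.

```python
import json
import typing


def invoke_lambda_function(
    *,
    function_name: typing.Optional[str] = None,
    payload: typing.Optional[typing.Mapping[str, str]] = None,
    client: typing.Any = None,
) -> typing.Any:
    """Invoke a Lambda function synchronously and return its decoded JSON result."""
    if function_name is None:
        # Assumed message; the original text is cut off in the source.
        raise ValueError("function_name parameter cannot be None")

    if client is None:
        # Imported lazily so the helper can be exercised with a stub client.
        import boto3

        client = boto3.client("lambda")

    response = client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",  # wait for the function to finish
        Payload=json.dumps(payload or {}).encode("utf-8"),
    )
    raw = response["Payload"].read()
    # A function that returns nothing yields an empty payload.
    return json.loads(raw) if raw else None
```

Inside a DAG, a helper like this could be called from a PythonOperator, although the AwsLambdaInvokeFunctionOperator mentioned elsewhere on this page covers the same use case without custom code.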

#TRIGGER AIRFLOW DAG VIA API MANUAL#

A paused DAG is not scheduled by the Scheduler, but you can still trigger it via the UI for manual runs. The pause and unpause actions are available via the UI and the API. Use an alternative auth backend if you need automated access to the API, up to cooking your own.

The Lambda function queries an external API and places 2 CSV files in an S3 bucket. AWS CLI: quick configuration with aws configure.

The file check, whose docstring reads """ uses redis to check if a new file is on the server""", works as follows. It connects with conn = redis.Redis(host='redis', port=6379) and reads the stored state with recent_header = conn.hgetall("header_dict"). Each key and value is decoded when it is bytes: key.decode() if isinstance(key, bytes) else key, and value.decode() if isinstance(value, bytes) else value, for every key, value pair. If 'Content-Length' not in recent_header.keys(), the current header is stored with conn.hmset("header_dict", current_header).
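The decode-and-compare logic of the redis check described above can be sketched without a running redis server by passing the stored hash in as a plain dict. This is a minimal sketch under stated assumptions: the function names are hypothetical, and comparing `Last-Modified` in addition to `Content-Length` is an assumption, since the source only shows the `Content-Length` check.

```python
from typing import Iterable, Mapping, Union

BytesOrStr = Union[bytes, str]


def decode_hash(raw: Mapping[BytesOrStr, BytesOrStr]) -> dict:
    """Decode bytes keys and values (as returned by redis hgetall) to str."""
    return {
        (key.decode() if isinstance(key, bytes) else key): (
            value.decode() if isinstance(value, bytes) else value
        )
        for key, value in raw.items()
    }


def is_new_file(
    stored_raw: Mapping[BytesOrStr, BytesOrStr],
    current_header: Mapping[str, str],
    keys: Iterable[str] = ("Content-Length", "Last-Modified"),
) -> bool:
    """Return True when the current header differs from the stored one."""
    stored = decode_hash(stored_raw)
    if "Content-Length" not in stored:
        # Nothing stored yet, so this is the first file seen.
        return True
    return any(stored.get(key) != current_header.get(key) for key in keys)


if __name__ == "__main__":
    stored = {b"Content-Length": b"1024"}
    print(is_new_file(stored, {"Content-Length": "2048"}))
```

With a live connection, `stored_raw` would come from `conn.hgetall("header_dict")`. Recent redis-py releases deprecate `hmset` in favor of `conn.hset("header_dict", mapping=current_header)`.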

#TRIGGER AIRFLOW DAG VIA API INSTALL#

To complete the steps on this page, you need the following: AWS CLI (install version 2). The AWS Command Line Interface (AWS CLI) is an open source tool that enables you to interact with AWS services using commands in your command-line shell.

With the [api] auth_backends option set to the session backend (airflow.api.auth.backend.session), your browser can access the API because it probably keeps a cookie-based session, but any other client will be unauthenticated.

So essentially there is no way to trigger Airflow's DAGs directly from Freshdesk webhooks. Currently, I have a standalone Airflow application. So I have to deploy another application (say, a Flask-based webserver) that gathers the events from the webhook and calls the Airflow REST API. Thanks.

Actually, I want to trigger a DAG every time a new file is placed on a remote server (like HTTPS, sftp, s3). But Airflow requires a defined data_interval, and the basic concept of Airflow does not allow triggering a DAG on an irregular interval. HttpSensor works only once during the scheduled time window. In my current example, I am using redis to persist the current file state. The DAG file starts with the docstring """ DAG for operational District heating """ and imports AwsLambdaInvokeFunctionOperator (from .operators.aws_lambda) and HttpSensor (from .http); the module paths are truncated in the source.
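The relay application described above (receive a webhook event, then call the Airflow REST API) can be sketched as a small WSGI app using only the standard library instead of Flask. This is a minimal sketch, not a production service: `make_webhook_app` and the `trigger` callback are hypothetical names, and the callback is where a call to the Airflow REST API would go.

```python
import json
from typing import Any, Callable, Iterable


def make_webhook_app(trigger: Callable[[Any], None]):
    """Return a WSGI app that forwards each JSON webhook event to `trigger`."""

    def app(environ, start_response) -> Iterable[bytes]:
        plain = [("Content-Type", "text/plain")]

        if environ.get("REQUEST_METHOD") != "POST":
            start_response("405 Method Not Allowed", plain)
            return [b"POST only\n"]

        # Read exactly the declared body length, as WSGI requires.
        length = int(environ.get("CONTENT_LENGTH") or 0)
        raw = environ["wsgi.input"].read(length)

        try:
            event = json.loads(raw or b"{}")
        except json.JSONDecodeError:
            start_response("400 Bad Request", plain)
            return [b"invalid JSON\n"]

        # Hand the event over, e.g. to code that POSTs {"conf": event}
        # to the Airflow REST API's dagRuns endpoint.
        trigger(event)
        start_response("202 Accepted", plain)
        return [b"queued\n"]

    return app


if __name__ == "__main__":
    from wsgiref.simple_server import make_server

    # Placeholder trigger that only prints the event.
    server = make_server("", 8000, make_webhook_app(print))
    server.serve_forever()
```

Injecting the trigger as a callback keeps the HTTP handling testable without a running Airflow instance. Authentication of incoming webhooks is left out here and would be needed before exposing such a relay.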
